In a world of powerful AI tools, it’s tempting to believe that good results come just by asking. But the truth? How you ask still makes all the difference.

At Toru, we work with GPT models every day: training them, testing them, and turning them into usable tools for brands. And we’ve seen one thing over and over: even the best AI performs better when the prompt is clear, structured, and focused.

We’ve used prompt systems to reduce rewrite time by 60%, deliver consistent AR scripts across multilingual installations, and power real-time brand-aligned content engines.

Here’s the exact framework we use to do it.

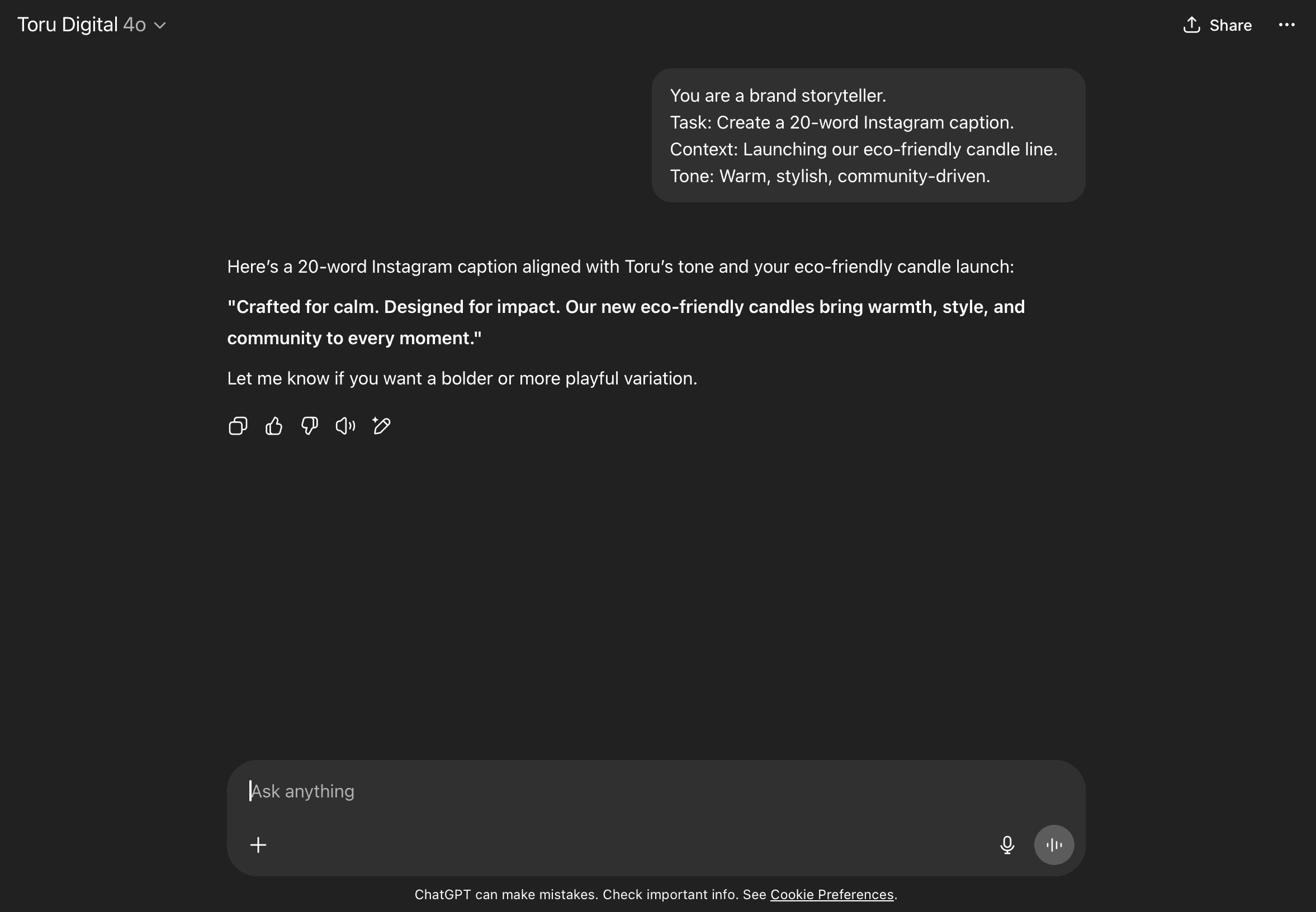

1. Set the Role, Set the Scene

Even the best GPT needs to know who it’s supposed to be and what it’s doing. Vague prompts lead to vague outputs. Every task should begin by making clear:

- The role it’s playing

- The context it’s writing for

- The tone and audience it should target

Instead of: “Write a post about our new product.”

Use: “As a product marketer at a SaaS company, write a LinkedIn post introducing a new feature for enterprise teams. Keep the tone clear, direct, and benefit-led.”

We embedded this logic into ToruGPT, our internal AI assistant that helps generate brand-aligned copy for proposals, case studies, and blogs. Defining role + task up front cut briefing errors by over 40%.
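
The role-first pattern can be captured as a small template so the role, task, and tone are always filled in and can be swapped independently. This is a minimal Python sketch, assuming a plain string template; the `RolePrompt` class and its fields are hypothetical names for illustration, not ToruGPT’s actual implementation. It rebuilds the “Use:” prompt above from its three parts.

```python
from dataclasses import dataclass


@dataclass
class RolePrompt:
    """Hypothetical template: who the model is, what it does, and how it sounds."""

    role: str  # the role, including its article and context ("a product marketer at ...")
    task: str  # the deliverable, including channel and audience
    tone: str  # the voice the output should use

    def render(self) -> str:
        # Role first, then the task, then tone guidance, as one directive prompt.
        return f"As {self.role}, {self.task}. Keep the tone {self.tone}."


prompt = RolePrompt(
    role="a product marketer at a SaaS company",
    task="write a LinkedIn post introducing a new feature for enterprise teams",
    tone="clear, direct, and benefit-led",
)
print(prompt.render())
```

Keeping the article inside `role` avoids guessing between “a” and “an” in the template.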

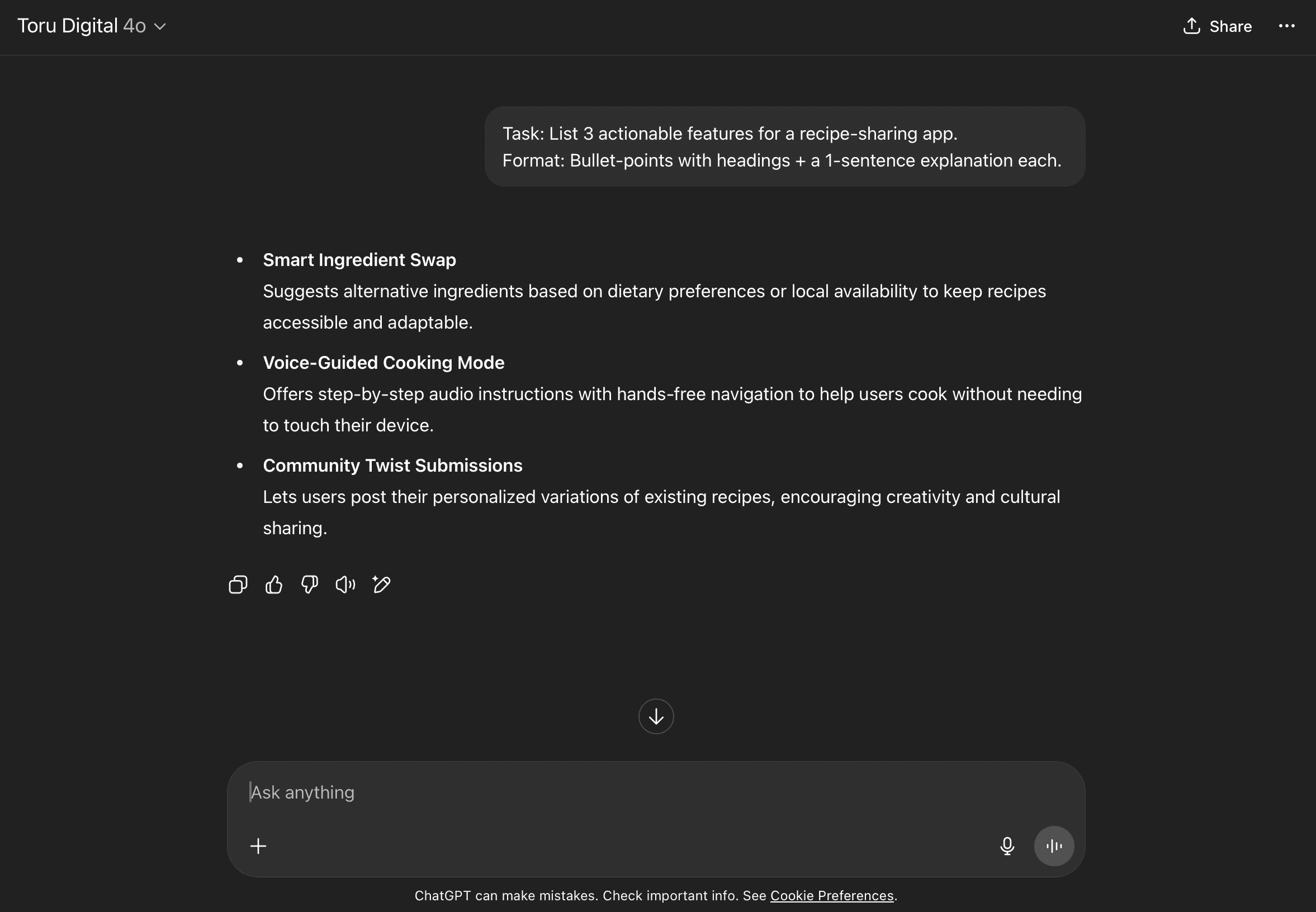

2. Structure for Repeatability

Think of prompts like design systems. When you format consistently, quality becomes scalable. We use structured “tags” in XML-style syntax to signal intent:

<role>Digital strategist</role>

<task>Write a 25-word homepage hook</task>

<tone>Confident, benefit-driven</tone>

<dont_include>Jargon or buzzwords</dont_include>

This approach powered our content automation workflow and reduced rewrite cycles by 60%.
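
The tag block above can be generated from a plain mapping so every prompt carries the same fields in the same order. This is a minimal Python sketch, assuming the four tags shown above form the team’s schema; `REQUIRED_TAGS` and `build_tagged_prompt` are hypothetical names. Values are escaped with the standard library’s `xml.sax.saxutils.escape` so a stray `<` or `&` in a value can’t break the structure.

```python
from xml.sax.saxutils import escape

# Hypothetical schema: the tags every prompt in the workflow must carry, in order.
REQUIRED_TAGS = ("role", "task", "tone", "dont_include")


def build_tagged_prompt(fields: dict[str, str]) -> str:
    """Render schema fields as XML-style tags, one per line, in schema order."""
    missing = [tag for tag in REQUIRED_TAGS if not fields.get(tag)]
    if missing:
        raise ValueError(f"missing required tags: {', '.join(missing)}")
    lines = []
    for tag in REQUIRED_TAGS:
        # escape() converts &, < and > so a value cannot open or close a tag.
        lines.append(f"<{tag}>{escape(fields[tag])}</{tag}>")
    return "\n".join(lines)


print(build_tagged_prompt({
    "role": "Digital strategist",
    "task": "Write a 25-word homepage hook",
    "tone": "Confident, benefit-driven",
    "dont_include": "Jargon or buzzwords",
}))
```

Because the order comes from one schema, prompts written by different people stay directly comparable, and a missing field fails loudly instead of silently producing a vaguer prompt.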

3. Train the Model with Examples

AI learns from patterns. If you want consistency, you have to show the model what consistent output looks like.

In one internal tool, we created a dataset of input/output pairs from past campaigns. By training GPT on these brand-specific transformations, we enabled team-wide consistency across formats like sales decks, value propositions, and UI copy.

Prompt: “Write a headline for a new AI dashboard.”

Example Output: “Meet the Control Hub: real-time insights, custom alerts, and one dashboard for every decision.”

We implemented this structure for Reza City, an AI prototype for rapid image generation. With examples, we guided style, emotion, and subject with precision, cutting trial-and-error time in half and producing outputs that matched creative intent in fewer iterations.
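
The text describes training GPT on input/output pairs, which may mean fine-tuning. This sketch shows only the prompt-time alternative, few-shot prompting, in which past pairs are replayed as earlier conversation turns before the new request. It assumes the common chat format of `{"role": ..., "content": ...}` messages; `build_few_shot_messages` and the new request are hypothetical, and no model is actually called.

```python
def build_few_shot_messages(
    instructions: str,
    examples: list[tuple[str, str]],
    new_input: str,
) -> list[dict[str, str]]:
    """Assemble a chat-style message list: instructions, example pairs, new request."""
    messages = [{"role": "system", "content": instructions}]
    for prompt, output in examples:
        # Each past pair becomes a user turn followed by the ideal assistant reply.
        messages.append({"role": "user", "content": prompt})
        messages.append({"role": "assistant", "content": output})
    # The real request goes last, so the model continues the demonstrated pattern.
    messages.append({"role": "user", "content": new_input})
    return messages


examples = [
    (
        "Write a headline for a new AI dashboard.",
        "Meet the Control Hub: real-time insights, custom alerts, "
        "and one dashboard for every decision.",
    ),
]
messages = build_few_shot_messages(
    "You write product headlines in our brand voice.",
    examples,
    "Write a headline for a new reporting API.",  # hypothetical new request
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Adding more pairs from past campaigns strengthens the pattern, at the cost of a longer prompt on every call.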

4. Don’t Stack, Sequence

Trying to generate an entire campaign in one go? You’ll likely end up with unfocused or inconsistent outputs.

Instead, break it down into a series of modular prompts:

Prompt 1: Write the campaign hook

Prompt 2: Expand into a landing page summary

Prompt 3: Generate two CTA options in a specific tone

We used this sequencing model for Vanishing Man, an interactive storytelling experience blending AR, location data, and narrative scripts. Prompting scene by scene let us fine-tune tone, tension, and pacing, which helped ensure historical accuracy and emotional resonance throughout.
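
A prompt sequence can be expressed as a small pipeline in which each prompt receives the previous step’s output. This is a minimal Python sketch: `run_sequence` and the step templates are hypothetical (the “confident” tone is an illustrative choice), and `generate` stands in for whatever model call you use. The example plugs in a fake generator that records prompts instead of calling a real API.

```python
from collections.abc import Callable

# Each step is a prompt template; {previous} is filled with the prior step's output.
STEPS = [
    "Write the campaign hook for: {previous}",
    "Expand this hook into a landing page summary: {previous}",
    "Generate two CTA options in a confident tone for: {previous}",
]


def run_sequence(brief: str, steps: list[str], generate: Callable[[str], str]) -> list[str]:
    """Run prompts one at a time, feeding each output into the next prompt."""
    outputs = []
    previous = brief
    for template in steps:
        prompt = template.format(previous=previous)
        previous = generate(prompt)  # one focused call per step
        outputs.append(previous)  # keep every intermediate result for review
    return outputs


calls: list[str] = []


def fake_generate(prompt: str) -> str:
    # Stand-in for a real model call: records the prompt, returns a numbered label.
    calls.append(prompt)
    return f"output {len(calls)}"


results = run_sequence("a new AI dashboard launch", STEPS, fake_generate)
print(results)
```

Keeping every intermediate output means a reviewer can adjust one step, such as the hook, and rerun only the steps after it.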

5. Say What NOT to Do

AI needs boundaries as much as direction. Don’t leave room for guesswork.

Every good prompt should include a few red lines:

- No emojis

- Avoid filler phrases like “in today’s fast-paced world…”

- Use active voice and plain English

We built these into our GPT-driven briefing tool for Diversey Hub, helping content creators maintain tone integrity across multilingual training materials and product guides.
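
Red lines work best when they appear both in the prompt and in a check on the output. This is a minimal Python sketch, assuming the rules listed above; the emoji pattern covers only common Unicode emoji and symbol blocks, and the filler-phrase list is illustrative, so treat both as hypothetical starting points. Active voice is not checked here, since reliable passive-voice detection needs real language analysis.

```python
import re

# Rough emoji match: pictograph/emoticon blocks plus misc symbols and dingbats.
EMOJI = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")

# Illustrative banned phrases, stored lowercase with straight apostrophes.
FILLER_PHRASES = ["in today's fast-paced world"]

RED_LINES = [
    "No emojis.",
    "Avoid filler phrases like \"in today's fast-paced world\".",
    "Use active voice and plain English.",
]


def with_red_lines(prompt: str) -> str:
    """Append the red lines to a prompt as an explicit rule list."""
    rules = "\n".join(f"- {rule}" for rule in RED_LINES)
    return f"{prompt}\n\nRules:\n{rules}"


def red_line_violations(text: str) -> list[str]:
    """Return a description of each checkable red line the text breaks."""
    problems = []
    if EMOJI.search(text):
        problems.append("contains emoji")
    # Lowercase and normalise curly apostrophes so "Today’s" matches "today's".
    normalised = text.lower().replace("\u2019", "'")
    for phrase in FILLER_PHRASES:
        if phrase in normalised:
            problems.append(f"filler phrase: {phrase!r}")
    return problems


print(with_red_lines("Write a product guide intro."))
print(red_line_violations("In today’s fast-paced world, speed matters 🚀"))
```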

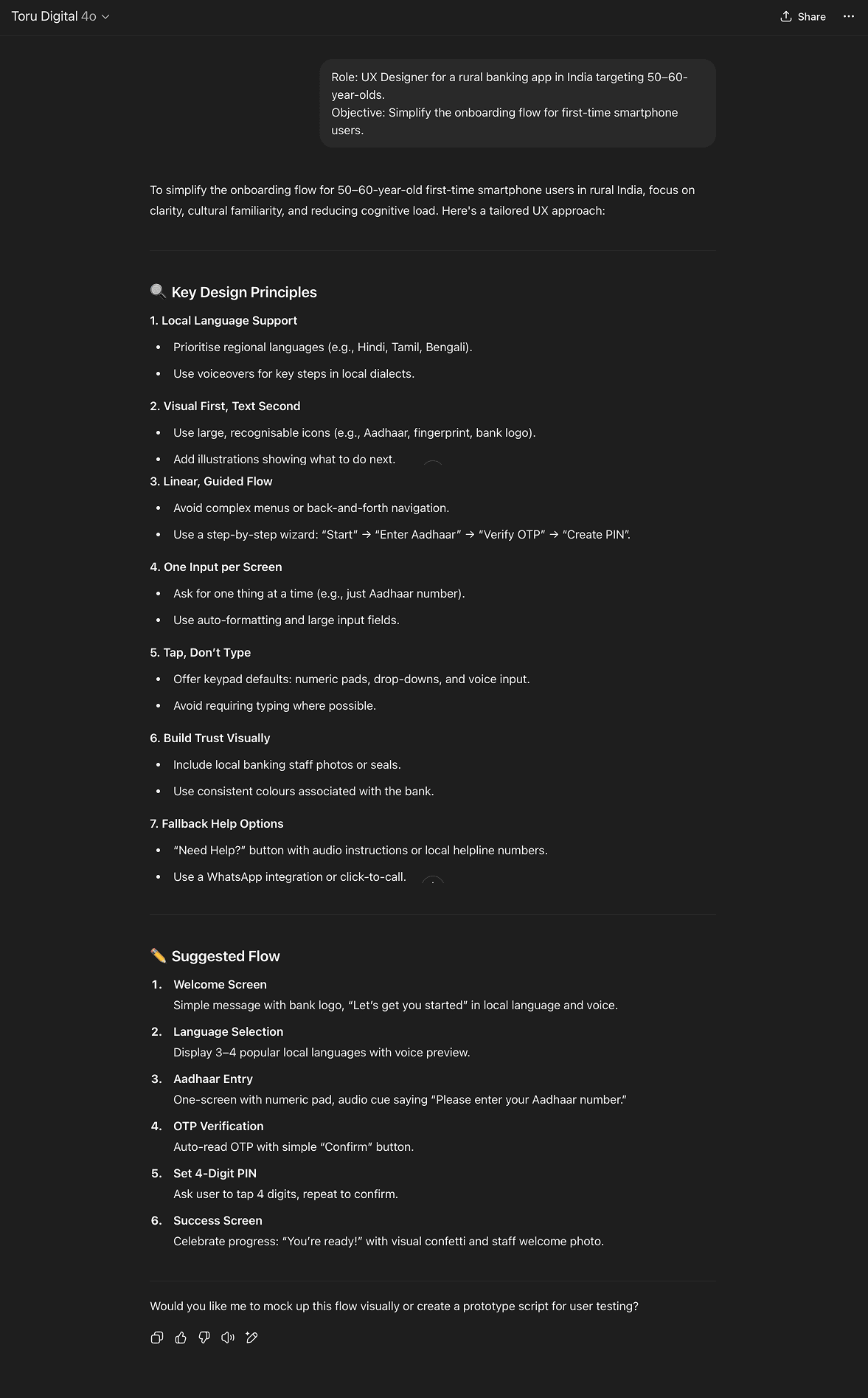

6. Instruction Trumps Style

This isn’t about sounding smart. It’s about being specific.

When prompting, clarity always beats cleverness. That means shorter sentences, directive phrasing, and exact requests. Think of it as briefing a junior creative, not asking a genius to guess.

Bad: “Write something catchy about our product.”

Better: “Write a 20-word intro that highlights speed and security. Keep it punchy, no metaphors, and no superlatives.”

We use this rule internally when writing prompts for our strategy tools, where precise tone affects how stakeholders perceive positioning.
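
Specific instructions are also easy to generate and to check. This is a minimal Python sketch, assuming a brief consists of a word limit, a format, key points, a style, and things to exclude; the `Brief` class and `join_phrases` helper are hypothetical. It rebuilds the “Better” prompt above and adds a word-count check, since the word limit is the one constraint a short function can verify mechanically.

```python
from dataclasses import dataclass, field


def join_phrases(items: list[str]) -> str:
    """Join phrases in plain English: "a", "a and b", "a, b, and c"."""
    if len(items) <= 2:
        return " and ".join(items)
    return ", ".join(items[:-1]) + ", and " + items[-1]


@dataclass
class Brief:
    """Hypothetical brief: every instruction is an explicit field."""

    words: int
    kind: str
    highlights: list[str]
    style: str
    exclude: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Style first, then each exclusion phrased as "no X".
        keep = [self.style] + [f"no {item}" for item in self.exclude]
        return (
            f"Write a {self.words}-word {self.kind} that highlights "
            f"{join_phrases(self.highlights)}. Keep it {join_phrases(keep)}."
        )

    def within_limit(self, text: str) -> bool:
        # Whitespace split is a rough word count; hyphenated words count once.
        return len(text.split()) <= self.words


brief = Brief(
    words=20,
    kind="intro",
    highlights=["speed", "security"],
    style="punchy",
    exclude=["metaphors", "superlatives"],
)
print(brief.render())
```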

Better Prompts = Smarter Systems

We don’t prompt for fun; we prompt for outcomes.

At Toru, prompt engineering isn’t a technical chore; it’s a creative strategy. It’s how we take control of tone, quality, and consistency when AI is doing the heavy lifting.

And the payoff? Faster content, fewer rewrites, stronger alignment, and a GPT that genuinely sounds like you.

Want to scale high-quality content or streamline internal workflows with AI?

We’ll show you how smarter prompts make it possible.